Article and photos by Marcus Siu

In the very early days of the film industry, the closest thing to motion capture was rotoscoping, developed by animator Max Fleischer in the mid-1910s. Rotoscoping is the process of filming live-action footage and tracing over it frame by frame. In those days, animators needed to hand-draw each frame, which was a laborious and time-consuming process.

A lot has happened since then.

In 2002, when Gollum was introduced to the world in “The Lord of the Rings: The Two Towers”, audiences were taken by surprise at how lifelike a CGI character could be. Andy Serkis, the pioneering actor who would become a household name for his performance/motion capture work, proved that digital characters could be fully realized and emotionally compelling. His work as Gollum was instrumental to the success of “The Lord of the Rings” trilogy, as was his portrayal of Caesar in the recent “Planet of the Apes” trilogy, which many viewers felt made the rebooted franchise vastly superior to the original.

For those who aren’t familiar, motion capture is the process in which actors’ physical movements are recorded and then recreated on digital character models. This allows actors to give realistic movement to fantastical CGI creatures and bring them to life. The Marvel Cinematic Universe, for example, would have been nearly impossible to create without this process.

In gaming, 3D artists record human actions and use them to animate digital models, bringing deep realism to video game characters. Performance capture technology allows artists to animate character models directly from actors’ movements and facial expressions. More than 90% of the top-selling games are dependent on motion capture.

Now, more than a century after rotoscoping, motion capture has evolved into “real time”, which was the theme at this year’s Game Developers Conference.

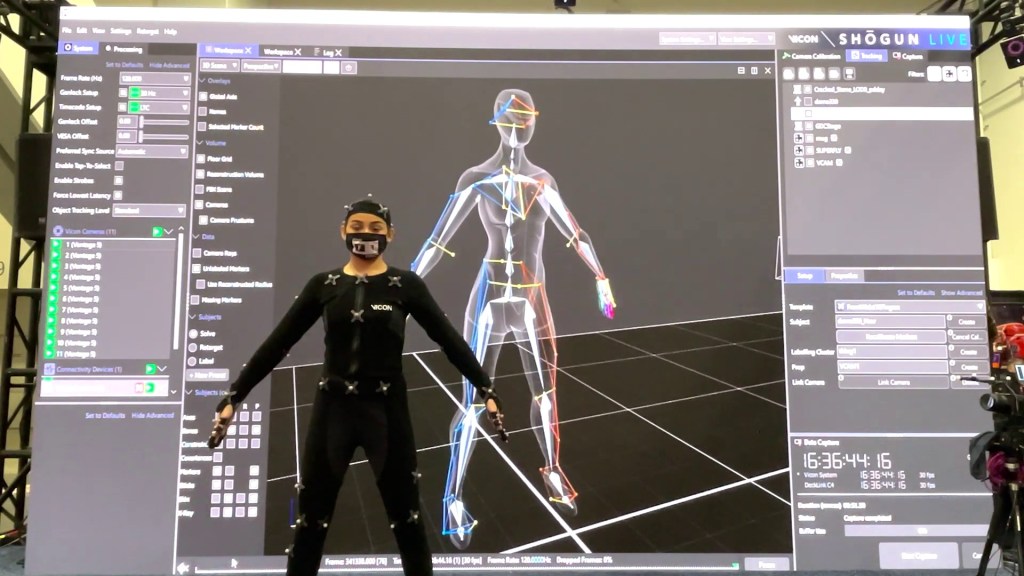

Setting up a motion capture session is fairly simple. You will need optical motion capture software on your computer, a room with an infrared camera system (with the ground plane and forward axis defined), and an actor in a mocap body suit fitted with markers. Assign an A-pose or T-pose to the skeleton to calibrate it in the software…and you are ready to go live. After the capture is done, you can import everything into Unity or Unreal Engine and assign the animations to a character. Easy peasy.

VICON

I got a chance to chat with Jeffrey Ovadya, Director of Sales Support and Marketing for Vicon Motion Systems, which describes itself as the global leader in motion capture cameras, software and systems for the VFX, life science and engineering industries over the past 35 years.

Q: So tell us about the latest Vicon project at GDC…

A: We’ve got two complete demonstrations right now.

We have partnered with ROE Visual, who have given us 60 panels for this 16 x 19-foot LED Powerwall, so we can showcase a virtual production pipeline using a virtual camera running in real time with a game that our team created. Mitchell Whitmore and Connor ended up making this racing game here that’s all live in Unreal. We’re letting people not only drive the racing simulation in Unreal live but also change the camera angle, so that you can see the perspective change on the ROE Powerwall. ROE is the LED panel manufacturer that’s used on “The Mandalorian” and all of those other shows as well, so this is a really fun demo.

Then later on today we’re gonna do a traditional performance capture presentation where we showcase the latest in our Shogun software, and we’re going to have some talent come in. We’re going to do live 10-finger capture, and we’re going to show some new STI camera calibration for virtual production pipelines and some new auto-skeleton features: just things to make performance capture, motion capture and virtual production easier across the board with the best product in the industry.

Here is a two-minute video that captures both demos.

Other noteworthy demos on the GDC 2022 floor came from OptiTrack, Qualisys and Manus, featured below.